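Contents

I. Playing an rtsp protocol stream
II. Playing a webrtc protocol stream
III. Real-time audio and video communication
Summary

I. Playing an rtsp protocol stream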

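If the webrtc stream is returned over the rtsp protocol, with a stream address such as rtsp://127.0.0.1:5115/session.mpg, the uniapp <video> component can play it directly once compiled to android, although there is usually a delay of 2 to 3 seconds.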

II. Playing a webrtc protocol stream

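If the webrtc stream is returned over the webrtc protocol, with a stream address such as webrtc://127.0.0.1:1988/live/livestream, we have to negotiate the sdp, connect to the streaming server, and set up the audio/video channel before the stream can be played. The delay is usually around 500 milliseconds.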
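The two cases above differ only in the URL scheme, so a component can branch on it before deciding whether to hand the address to <video> or to start sdp negotiation. The following is a minimal sketch; `detectStreamProtocol` is a hypothetical helper name, not part of uniapp or any library.

```javascript
// Hypothetical helper: classify a stream address by its URL scheme.
// Returns "rtsp", "webrtc", or "unknown".
function detectStreamProtocol(streamUrl) {
  const match = /^([a-z][a-z0-9+.-]*):\/\//i.exec(String(streamUrl).trim());
  if (!match) return "unknown";
  const scheme = match[1].toLowerCase();
  if (scheme === "rtsp") return "rtsp";
  // The utils.js code in this article treats "rtc://" like "webrtc://".
  if (scheme === "webrtc" || scheme === "rtc") return "webrtc";
  return "unknown";
}

console.log(detectStreamProtocol("rtsp://127.0.0.1:5115/session.mpg")); // "rtsp"
console.log(detectStreamProtocol("webrtc://127.0.0.1:1988/live/livestream")); // "webrtc"
```

An "rtsp" result would go straight to the <video> src, while a "webrtc" result would go through the negotiation described in this section.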

Wrapping it in a WebrtcVideo component

```html
<template>
  <video id="rtc_media_player" width="100%" height="100%" autoplay playsinline></video>
</template>

<!-- Because we use a js library, we need uniapp's renderjs -->
<script module="webrtcVideo" lang="renderjs">
import $ from "./jquery-1.10.2.min.js";
import { prepareUrl } from "./utils.js";

export default {
  data() {
    return {
      // RTCPeerConnection object
      peerConnection: null,
      // Address of the webrtc stream to play
      playUrl: "webrtc://127.0.0.1:1988/live/livestream",
    };
  },
  methods: {
    createPeerConnection() {
      const that = this;
      // Create the WebRTC communication channel
      that.peerConnection = new RTCPeerConnection(null);
      // Add receive-only audio and video transceivers
      that.peerConnection.addTransceiver("audio", { direction: "recvonly" });
      that.peerConnection.addTransceiver("video", { direction: "recvonly" });
      // When the server's stream arrives, feed it to the player
      that.peerConnection.ontrack = (event) => {
        const remoteVideo = document.getElementById("rtc_media_player");
        if (remoteVideo.srcObject !== event.streams[0]) {
          remoteVideo.srcObject = event.streams[0];
        }
      };
    },
    async makeCall() {
      const url = this.playUrl;
      this.createPeerConnection();
      // Build the server request address, e.g. http://192.168.0.1:1988/rtc/v1/play/
      const conf = prepareUrl(url);
      // Generate the offer sdp
      const offer = await this.peerConnection.createOffer();
      await this.peerConnection.setLocalDescription(offer);
      const session = await new Promise((resolve, reject) => {
        $.ajax({
          type: "POST",
          url: conf.apiUrl,
          data: offer.sdp,
          contentType: "text/plain",
          dataType: "json",
          crossDomain: true,
        })
          .done((data) => {
            // The server returns the answer sdp
            if (data.code) {
              reject(data);
              return;
            }
            resolve(data);
          })
          .fail((reason) => {
            reject(reason);
          });
      });
      // Set the remote description; once the sdp is negotiated the channel is established
      await this.peerConnection.setRemoteDescription(
        new RTCSessionDescription({ type: "answer", sdp: session.sdp })
      );
      session.simulator =
        conf.schema + "//" + conf.urlObject.server + ":" + conf.port + "/rtc/v1/nack/";
      return session;
    },
  },
  mounted() {
    // makeCall is async, so failures are reported through the promise, not try/catch
    this.makeCall()
      .then((res) => {
        // webrtc channel established
      })
      .catch((error) => {
        // webrtc channel could not be established
        console.log(error);
      });
  },
};
</script>
```

utils.js

```javascript
const defaultPath = "/rtc/v1/play/";

export const prepareUrl = (webrtcUrl) => {
  var urlObject = parseUrl(webrtcUrl);
  var schema = "http:";
  var port = urlObject.port || 1985;
  if (schema === "https:") {
    port = urlObject.port || 443;
  }
  // @see https://github.com/rtcdn/rtcdn-draft
  var api = urlObject.user_query.play || defaultPath;
  if (api.lastIndexOf("/") !== api.length - 1) {
    api += "/";
  }
  var apiUrl = schema + "//" + urlObject.server + ":" + port + api;
  for (var key in urlObject.user_query) {
    if (key !== "api" && key !== "play") {
      apiUrl += "&" + key + "=" + urlObject.user_query[key];
    }
  }
  // Replace /rtc/v1/play/&k=v to /rtc/v1/play/?k=v
  apiUrl = apiUrl.replace(api + "&", api + "?");
  var streamUrl = urlObject.url;
  return {
    apiUrl: apiUrl,
    streamUrl: streamUrl,
    schema: schema,
    urlObject: urlObject,
    port: port,
    tid: Number(parseInt(new Date().getTime() * Math.random() * 100))
      .toString(16)
      .substr(0, 7),
  };
};

export const parseUrl = (url) => {
  // @see: http://stackoverflow.com/questions/10469575/how-to-use-location-object-to-parse-url-without-redirecting-the-page-in-javascri
  var a = document.createElement("a");
  a.href = url
    .replace("rtmp://", "http://")
    .replace("webrtc://", "http://")
    .replace("rtc://", "http://");
  var vhost = a.hostname;
  var app = a.pathname.substr(1, a.pathname.lastIndexOf("/") - 1);
  var stream = a.pathname.substr(a.pathname.lastIndexOf("/") + 1);
  // parse the vhost in the params of app, that srs supports.
  app = app.replace("...vhost...", "?vhost=");
  if (app.indexOf("?") >= 0) {
    var params = app.substr(app.indexOf("?"));
    app = app.substr(0, app.indexOf("?"));
    if (params.indexOf("vhost=") > 0) {
      vhost = params.substr(params.indexOf("vhost=") + "vhost=".length);
      if (vhost.indexOf("&") > 0) {
        vhost = vhost.substr(0, vhost.indexOf("&"));
      }
    }
  }
  // when vhost equals to server, and server is ip,
  // the vhost is __defaultVhost__
  if (a.hostname === vhost) {
    var re = /^(\d+)\.(\d+)\.(\d+)\.(\d+)$/;
    if (re.test(a.hostname)) {
      vhost = "__defaultVhost__";
    }
  }
  // parse the schema
  var schema = "rtmp";
  if (url.indexOf("://") > 0) {
    schema = url.substr(0, url.indexOf("://"));
  }
  var port = a.port;
  if (!port) {
    if (schema === "http") {
      port = 80;
    } else if (schema === "https") {
      port = 443;
    } else if (schema === "rtmp") {
      port = 1935;
    }
  }
  var ret = {
    url: url,
    schema: schema,
    server: a.hostname,
    port: port,
    vhost: vhost,
    app: app,
    stream: stream,
  };
  fill_query(a.search, ret);
  // For webrtc API, we use 443 if page is https, or schema specified it.
  if (!ret.port) {
    if (schema === "webrtc" || schema === "rtc") {
      if (ret.user_query.schema === "https") {
        ret.port = 443;
      } else if (window.location.href.indexOf("https://") === 0) {
        ret.port = 443;
      } else {
        // For WebRTC, SRS use 1985 as default API port.
        ret.port = 1985;
      }
    }
  }
  return ret;
};

export const fill_query = (query_string, obj) => {
  // pure user query object.
  obj.user_query = {};
  if (query_string.length === 0) {
    return;
  }
  // split again for angularjs.
  if (query_string.indexOf("?") >= 0) {
    query_string = query_string.split("?")[1];
  }
  var queries = query_string.split("&");
  for (var i = 0; i < queries.length; i++) {
    var elem = queries[i];
    var query = elem.split("=");
    obj[query[0]] = query[1];
    obj.user_query[query[0]] = query[1];
  }
  // alias domain for vhost.
  if (obj.domain) {
    obj.vhost = obj.domain;
  }
};
```

Usage in a page

```html
<template>
  <VideoWebrtc />
</template>

<script setup>
import VideoWebrtc from "@/components/videoWebrtc";
</script>
```
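To make the address handling in utils.js easier to follow, here is a condensed sketch of the same idea using the standard `URL` class instead of an anchor element. The name `buildPlayApiUrl` is hypothetical, and the sketch covers only the host, port and API path. It assumes the same defaults as prepareUrl (plain http, API port 1985, path /rtc/v1/play/) and ignores query parameters and vhost handling.

```javascript
// Hypothetical condensed version of prepareUrl: derive the HTTP API
// address used for the sdp exchange from a webrtc:// stream address.
function buildPlayApiUrl(webrtcUrl) {
  // Swap the custom scheme for http:// so URL parsing is well defined.
  const httpUrl = webrtcUrl.replace(/^(webrtc|rtc):\/\//, "http://");
  const parsed = new URL(httpUrl);
  // Assumed default API port when the address carries none, as in utils.js.
  const port = parsed.port || "1985";
  return "http://" + parsed.hostname + ":" + port + "/rtc/v1/play/";
}

console.log(buildPlayApiUrl("webrtc://127.0.0.1:1988/live/livestream"));
// http://127.0.0.1:1988/rtc/v1/play/
```

The result is the address that makeCall posts the offer sdp to.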

Points to note:

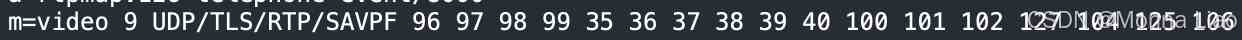

1. One of the key identifiers in sdp negotiation is the media description, m=xxx <type> <code>. Example lines are shown below:

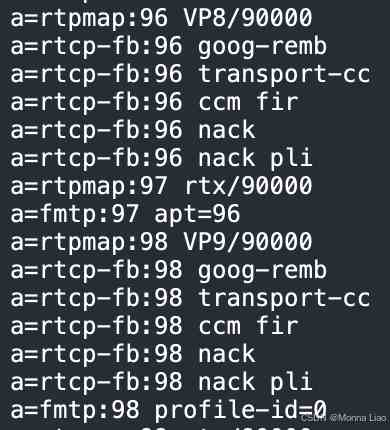

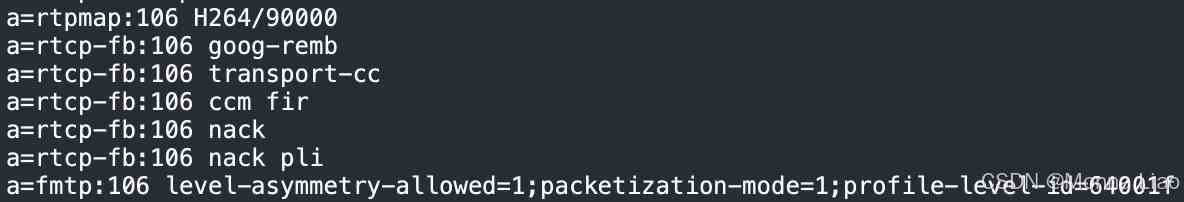

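A complete media description starts at one m=xxx <type> <code> line and ends at the next m=xxx <type> <code> line. Taking video as an example, the media description lists the video encodings that the current device is able to play, commonly VP8, VP9, H264 and so on.

Comparing the codes after m=video with the a=rtpmap lines shows that the m=video line lists every code that appears after a=rtpmap. The string that follows the code in an a=rtpmap line names the video stream format, but the mapping between code and format is not fixed. On device A, H264 may be announced as a=rtpmap:106 H264/90000, while on device B it may be a=rtpmap:100 H264/90000.

So to determine which video stream formats a device can play, look at the string after the code in each a=rtpmap line rather than at the code itself.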
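The lookup described above can be sketched as a small parser that walks one media section and maps each a=rtpmap code to its format name. `listCodecs` is a hypothetical helper, and the sample sdp is a hand-written, heavily shortened illustration rather than output captured from a real device.

```javascript
// Collect code -> format name for one media section of an sdp.
// `kind` is "audio" or "video".
function listCodecs(sdp, kind) {
  const codecs = {};
  let inSection = false;
  for (const line of sdp.split(/\r?\n/)) {
    if (line.startsWith("m=")) {
      // A media description runs from its m= line to the next m= line.
      inSection = line.startsWith("m=" + kind + " ");
      continue;
    }
    if (!inSection) continue;
    // e.g. a=rtpmap:106 H264/90000
    const match = /^a=rtpmap:(\d+) ([^/]+)\//.exec(line);
    if (match) codecs[match[1]] = match[2];
  }
  return codecs;
}

// Illustrative sdp fragment (assumption: real offers contain many more lines).
const sampleSdp = [
  "v=0",
  "m=audio 9 UDP/TLS/RTP/SAVPF 111",
  "a=rtpmap:111 opus/48000/2",
  "m=video 9 UDP/TLS/RTP/SAVPF 96 106",
  "a=rtpmap:96 VP8/90000",
  "a=rtpmap:106 H264/90000",
].join("\r\n");

const videoCodecs = listCodecs(sampleSdp, "video");
// Check by format name, because the numeric code differs between devices.
const supportsH264 = Object.values(videoCodecs).includes("H264");
console.log(videoCodecs, supportsH264);
```

In the component, the same function could be applied to `offer.sdp` right after createOffer to log what the device announces.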

Some of the criteria for a successful negotiation are:

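- The number of m=xxx sections in the offer sdp must match the number of m=xxx sections in the answer sdp;
- The order of the m=xxx sections in the offer sdp must match the order in the answer sdp; for example, both put m=audio first and m=video second, or the other way round;
- The <code> values after m=audio in the answer sdp must be contained in the <code> values after m=audio in the offer sdp.

The m=xxx sections of the offer sdp are created by addTransceiver. A first argument of audio produces m=audio, a first argument of video produces m=video, and the order of creation determines the order of the m=xxx sections:

```javascript
that.peerConnection.addTransceiver("audio", { direction: "recvonly" });
that.peerConnection.addTransceiver("video", { direction: "recvonly" });
```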

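The negotiation criteria listed above can be checked mechanically when an answer is rejected and the reason is unclear. The sketch below compares only the m= lines; `mediaSections` and `checkAnswer` are hypothetical helper names, and applying the code-subset rule to every section (not just m=audio) is an assumption of this sketch.

```javascript
// Extract the m= lines of an sdp, in order, as { kind, payloads }.
function mediaSections(sdp) {
  return sdp
    .split(/\r?\n/)
    .filter((line) => line.startsWith("m="))
    .map((line) => {
      // m=<kind> <port> <proto> <code> <code> ...
      const parts = line.slice(2).trim().split(/\s+/);
      return { kind: parts[0], payloads: parts.slice(3) };
    });
}

// Return a list of problems; an empty list means the criteria hold.
function checkAnswer(offerSdp, answerSdp) {
  const offer = mediaSections(offerSdp);
  const answer = mediaSections(answerSdp);
  if (offer.length !== answer.length) {
    return ["m= count differs: " + offer.length + " vs " + answer.length];
  }
  const problems = [];
  offer.forEach((section, i) => {
    if (section.kind !== answer[i].kind) {
      problems.push("m= order differs at index " + i);
      return;
    }
    const extra = answer[i].payloads.filter(
      (code) => !section.payloads.includes(code)
    );
    if (extra.length > 0) {
      problems.push("m=" + section.kind + " answers with unoffered code " + extra.join(","));
    }
  });
  return problems;
}

// Hand-written m= lines for illustration only.
const offerLines = "m=audio 9 UDP/TLS/RTP/SAVPF 111 103\r\nm=video 9 UDP/TLS/RTP/SAVPF 96 106";
const answerLines = "m=audio 9 UDP/TLS/RTP/SAVPF 111\r\nm=video 9 UDP/TLS/RTP/SAVPF 106";
console.log(checkAnswer(offerLines, answerLines)); // []
```

Logging the result just before setRemoteDescription makes it easier to tell a server-side sdp mismatch from a network failure.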
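The sdp also contains an a=mid:xxx entry, where xxx may be audio and video in a browser but 0 and 1 on android devices, so the server must take care to match the offer sdp.

Regarding the audio/video transceivers: the api used above is addTransceiver, but some android devices report that this api does not exist. In that case it can be replaced with getUserMedia + addTrack:

```javascript
data() {
  return {
    // ......
    localStream: null,
    // ......
  };
},
methods: {
  createPeerConnection() {
    const that = this;
    // Create the WebRTC communication channel
    that.peerConnection = new RTCPeerConnection(null);
    that.localStream.getTracks().forEach((track) => {
      that.peerConnection.addTrack(track, that.localStream);
    });
    // When the server's stream arrives, feed it to the player
    that.peerConnection.ontrack = (event) => {
      // ......
    };
  },
  async makeCall() {
    const that = this;
    that.localStream = await navigator.mediaDevices.getUserMedia({
      video: true,
      audio: true,
    });
    const url = this.playUrl;
    // ............
  },
},
```

Note that navigator.mediaDevices.getUserMedia captures the media streams of the device's camera and microphone, so the device must have camera and recording hardware and the corresponding permissions must be granted. Otherwise the api call fails.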
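Since the fallback needs camera and microphone permissions, it may be preferable to use it only on devices that actually lack addTransceiver. The following is a sketch of that feature detection. `attachMedia` and its `getLocalStream` callback are hypothetical names; the callback is expected to wrap navigator.mediaDevices.getUserMedia.

```javascript
// Hypothetical helper: prefer addTransceiver, fall back to addTrack.
// `getLocalStream` is called only when the fallback is needed, so the
// permission prompt is avoided on devices that support addTransceiver.
async function attachMedia(pc, getLocalStream) {
  if (typeof pc.addTransceiver === "function") {
    pc.addTransceiver("audio", { direction: "recvonly" });
    pc.addTransceiver("video", { direction: "recvonly" });
    return "transceiver";
  }
  const stream = await getLocalStream();
  stream.getTracks().forEach((track) => pc.addTrack(track, stream));
  return "track";
}

// Possible use inside the component (browser only):
// await attachMedia(this.peerConnection, () =>
//   navigator.mediaDevices.getUserMedia({ video: true, audio: true })
// );
```

The return value only reports which path was taken, which is handy for logging on the devices that behave differently.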

III. Real-time audio and video communication

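Stream playback in this kind of p2p scenario usually requires a websocket connection to a server, and plays the local stream and the stream from the server side at the same time.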

```html
<template>
  <div>Local Video</div>
  <video id="localVideo" autoplay playsinline></video>
  <div>Remote Video</div>
  <video id="remoteVideo" autoplay playsinline></video>
</template>

<script module="webrtcVideo" lang="renderjs">
export default {
  data() {
    return {
      signalingServerUrl: "ws://127.0.0.1:8085",
      iceServersUrl: "stun:stun.l.google.com:19302",
      localStream: null,
      peerConnection: null,
      // WebSocket connection to the signaling server, shared by all methods
      ws: null,
    };
  },
  methods: {
    async startLocalStream() {
      try {
        this.localStream = await navigator.mediaDevices.getUserMedia({
          video: true,
          audio: true,
        });
        document.getElementById("localVideo").srcObject = this.localStream;
      } catch (err) {
        console.error("Error accessing media devices.", err);
      }
    },
    createPeerConnection() {
      const configuration = { iceServers: [{ urls: this.iceServersUrl }] };
      this.peerConnection = new RTCPeerConnection(configuration);
      // Skip the local tracks if the camera or microphone could not be opened
      if (this.localStream) {
        this.localStream.getTracks().forEach((track) => {
          this.peerConnection.addTrack(track, this.localStream);
        });
      }
      this.peerConnection.onicecandidate = (event) => {
        if (event.candidate) {
          this.ws.send(
            JSON.stringify({
              type: "candidate",
              candidate: event.candidate,
            })
          );
        }
      };
      this.peerConnection.ontrack = (event) => {
        const remoteVideo = document.getElementById("remoteVideo");
        if (remoteVideo.srcObject !== event.streams[0]) {
          remoteVideo.srcObject = event.streams[0];
        }
      };
    },
    async makeCall() {
      this.createPeerConnection();
      const offer = await this.peerConnection.createOffer();
      await this.peerConnection.setLocalDescription(offer);
      this.ws.send(JSON.stringify(offer));
    },
  },
  mounted() {
    this.ws = new WebSocket(this.signalingServerUrl);
    this.ws.onopen = async () => {
      console.log("Connected to the signaling server");
      // The socket must be open and the local stream ready before the offer is sent
      await this.startLocalStream();
      await this.makeCall();
    };
    this.ws.onmessage = async (message) => {
      const data = JSON.parse(message.data);
      if (data.type === "offer") {
        if (!this.peerConnection) this.createPeerConnection();
        await this.peerConnection.setRemoteDescription(
          new RTCSessionDescription(data)
        );
        const answer = await this.peerConnection.createAnswer();
        await this.peerConnection.setLocalDescription(answer);
        this.ws.send(JSON.stringify(this.peerConnection.localDescription));
      } else if (data.type === "answer") {
        if (!this.peerConnection) this.createPeerConnection();
        await this.peerConnection.setRemoteDescription(
          new RTCSessionDescription(data)
        );
      } else if (data.type === "candidate") {
        if (this.peerConnection) {
          try {
            await this.peerConnection.addIceCandidate(
              new RTCIceCandidate(data.candidate)
            );
          } catch (e) {
            console.error("Error adding received ICE candidate", e);
          }
        }
      }
    };
  },
};
</script>
```

Compared with playing a webrtc protocol stream, the p2p case uses WebSocket instead of ajax to send and receive the sdp and adds playback of the local stream. The rest of the code is the same as for playing the protocol stream.
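The onmessage handler above trusts whatever arrives on the socket. A small validation step in front of it keeps malformed messages away from the RTCPeerConnection calls. This is only a sketch: `decodeSignal` is a hypothetical helper, and it assumes the three message shapes used in the code above (offer and answer carry `sdp`, candidate carries `candidate`).

```javascript
const SIGNAL_TYPES = ["offer", "answer", "candidate"];

// Parse a raw WebSocket payload; return null for anything unexpected.
function decodeSignal(raw) {
  let data;
  try {
    data = JSON.parse(raw);
  } catch (e) {
    return null; // not JSON
  }
  if (!data || !SIGNAL_TYPES.includes(data.type)) return null;
  if (data.type === "candidate") {
    return data.candidate ? data : null;
  }
  // offer and answer must carry the sdp text
  return typeof data.sdp === "string" ? data : null;
}

console.log(decodeSignal('{"type":"offer","sdp":"v=0"}')); // { type: 'offer', sdp: 'v=0' }
console.log(decodeSignal("hello")); // null
```

In the handler, `const data = decodeSignal(message.data); if (!data) return;` would replace the bare JSON.parse call.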